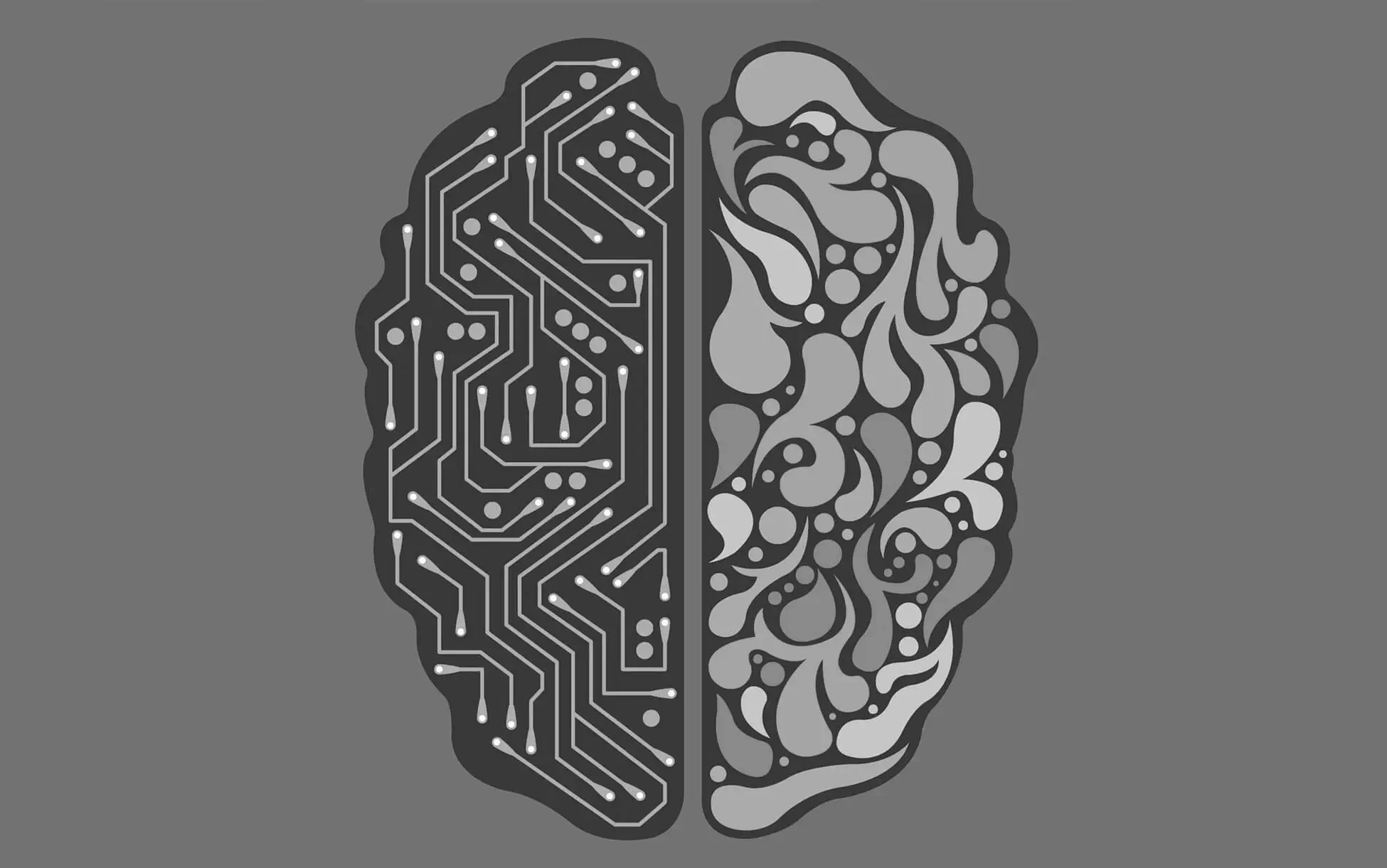

Data Tagging: The process of converting raw data into a format that is easy to analyze and visualize

Benefits of Data Tagging

Data tagging reduces the time and money organizations spend on the secondary data analysis that supports decision-making.

All tags are applied manually and are recorded in the system to enable easier data cleaning. Accurate and relevant data tags enhance model precision, reducing errors in AI-driven tasks and applications.

The nature of the data and its purpose are identified before careful application of tags. AI can use tags to personalize content recommendations, tailoring user experiences based on preferences and behavior.
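As a rough illustration of how tags can feed content recommendations, the minimal sketch below builds a tag profile from the items a user has viewed and ranks unseen items by how strongly their tags match that profile. The item ids, the tags, and the scoring rule are all hypothetical, chosen only for this example; this is not a description of Foiwe's or ContentAnalyzer's actual method.

```python
from collections import Counter

# Hypothetical catalogue: item id -> set of tags applied during data tagging.
ITEM_TAGS = {
    "video_1": {"cooking", "vegan"},
    "video_2": {"cooking", "baking"},
    "video_3": {"travel", "budget"},
    "video_4": {"vegan", "baking"},
    "video_5": {"cooking", "vegan", "baking"},
}

def build_profile(viewed_items, item_tags):
    """Count how often each tag appears among the items a user has viewed."""
    profile = Counter()
    for item_id in viewed_items:
        profile.update(item_tags.get(item_id, ()))
    return profile

def recommend(viewed_items, item_tags, top_n=2):
    """Rank unseen items by the summed profile weight of their tags."""
    profile = build_profile(viewed_items, item_tags)
    seen = set(viewed_items)
    scores = {
        item_id: sum(profile[tag] for tag in tags)
        for item_id, tags in item_tags.items()
        if item_id not in seen
    }
    # Highest score first; ties are broken by item id for a stable order.
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    # Items that share no tag with the profile are never recommended.
    return [item_id for item_id, score in ranked[:top_n] if score > 0]

print(recommend(["video_1"], ITEM_TAGS))
```

The quality of the ranking depends directly on the quality of the tags: a missing or wrong tag on an item changes its score, which is one reason accurate tagging matters for personalization.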

Error-free and robust solutions are used in every step to ensure accuracy. Foiwe’s process-oriented data tagging services are driven by strict data quality control, ensuring the accuracy and consistency of labeled datasets.

Empowering your business with individualized solutions

While a few platforms are tackling the issues related to content moderation, others are still in the process of determining their starting point. In contrast, we have already successfully implemented it. Experience our AI content moderation at its finest with ContentAnalyzer.

With a dedicated account manager as your single point of contact, accessible around the clock by phone or messenger, you get personalized support and swift communication in real time. We aim for seamless problem-solving and for greater satisfaction with our service delivery and partnership through continuous communication across multiple channels.

Applications and Capabilities

Data tagging also supports machine learning. It is particularly useful for large machine learning tasks, where well-tagged data helps deliver better solutions to the problems users face.

Applications

- Training AI

- Social Platforms

- Audio and Video Applications

- E-commerce Platforms

Capabilities

- Capable of handling large data volumes

- Multilingual team

- Experienced staff for greater output

- Data Tagging functions as a clear indexing solution in machine learning.

Speak with our subject matter experts

Data Tagging as a service

Need for Accurate Data Tagging

Data tagging is a big part of data preparation, especially for machine learning applications. It is the process of converting raw data into a format that is easy to analyze and visualize. Data tagging reduces the time needed for data analysis by removing unneeded fields from data sets, and it makes the analysis more robust. It can also greatly speed up data preparation and manipulation, which in turn helps improve machine learning performance.
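To make the idea of removing unneeded fields and attaching tags concrete, here is a minimal sketch of one preparation step. The field names, the tag vocabulary, and the keyword rule are hypothetical; the keyword lookup merely stands in for an annotator's decision so that the example can run on its own, and it should not be read as how tags are actually assigned.

```python
# Hypothetical choice of fields worth keeping for analysis.
KEEP_FIELDS = ("id", "text")

# Hypothetical keyword -> tag mapping, standing in for a human annotator.
TAG_KEYWORDS = {
    "refund": "billing",
    "invoice": "billing",
    "crash": "bug",
    "error": "bug",
}

def tag_record(record):
    """Return a slimmed-down copy of a raw record with a sorted list of tags."""
    text = record.get("text", "")
    # Lowercase and strip basic punctuation so "crash." still matches "crash".
    words = [word.strip(".,!?") for word in text.lower().split()]
    tags = sorted({TAG_KEYWORDS[word] for word in words if word in TAG_KEYWORDS})
    # Keep only the fields needed downstream; everything else is dropped.
    slim = {field: record[field] for field in KEEP_FIELDS if field in record}
    slim["tags"] = tags
    return slim

raw = {
    "id": 1,
    "text": "App crash after the invoice page.",
    "session": "abc123",      # unneeded for analysis, dropped below
    "user_agent": "example",  # unneeded for analysis, dropped below
}
print(tag_record(raw))
```

The output keeps `id` and `text`, adds a `tags` list, and omits the session and user-agent fields, leaving a smaller record that is easier to filter and aggregate by tag.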

Foiwe ensures proper and smooth completion and distribution of data sets. We also provide quality assurance for data analysis. Our tagging process ensures that the quality parameters have been met and that the output is reliable.
